Such a finding would create ground for further research into the possibility of modeling musical systems using information entropy.

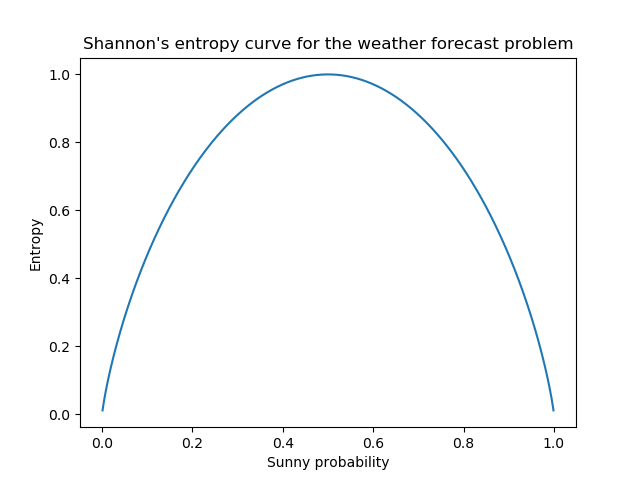

If any do, this would imply that at least some elements of shape note music can be modeled and expressed using information entropy. These entropy values are then put through rudimentary statistical analysis to determine whether any pattern or correlation emerges among the entropy values within the shape note tunes. If logarithms are taken to base 2, information is denominated in ‘bits,’ but if natural logarithms are used, the information is denominated in ‘nats.’ The book explains, with a minimum of mathematics, what information theory is and how it is related to thermodynamic entropy. The amount of information gained when we see a w that has probability P(w) turns out to be the negative logarithm of the probability (notated ‘−log P(w)’). In this paper, we introduce the concepts of information entropy, rough entropy and knowledge granulation in rough set theory, and establish the relationships among those concepts. Randomness is a measure of uncertainty in an outcome and thus is applied to the concept of information entropy. Entropy is a measure of the unpredictability of information content. For an image Im, for example, the entropy S can be computed from the normalized histogram H: find where the normalized histogram equals zero, exclude those zero values from the computation to obtain Hx, and compute the entropy as a negative sum over the remaining values of Hx. Rough set theory is a relatively new mathematical tool for use in computer applications in circumstances which are characterized by vagueness and uncertainty. In other words, entropy is the expected value of the information contained in each message. Over the course of this study, data on pitch and rhythmic entropies are collected for each of the three or four voice parts in a sample of 20 shape note tunes from The Sacred Harp. The average information gain is called ‘entropy.’ The entropy will be lowest when you can usually predict the next symbol with good reliability and highest when you often cannot.
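
As a rough illustration of the definition above (entropy as the expected value of −log P(w)), here is a minimal Python sketch. The function name `shannon_entropy`, the `base` parameter and the sample `melody` are assumptions made for this example only; they are not taken from the study or from The Sacred Harp.

```python
import math
from collections import Counter


def shannon_entropy(symbols, base=2.0):
    """Estimate the entropy of a symbol sequence from its observed frequencies.

    base=2.0 gives the result in bits; base=math.e gives it in nats.
    """
    counts = Counter(symbols)
    total = sum(counts.values())
    if total == 0:
        return 0.0  # an empty sequence carries no information
    h = 0.0
    for count in counts.values():
        p = count / total            # empirical probability P(w)
        h -= p * math.log(p, base)   # add P(w) * (-log P(w))
    return h


# Hypothetical pitch sequence written as shape note syllables (illustrative only).
melody = ["fa", "sol", "la", "fa", "sol", "la", "mi", "fa"]
bits = shannon_entropy(melody)               # entropy in bits
nats = shannon_entropy(melody, base=math.e)  # the same quantity in nats
```

Symbols that never occur are simply absent from the counts, so there is no zero probability to exclude before taking logarithms. A sequence that repeats one symbol has entropy 0, and the value grows as the next symbol becomes harder to predict.
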
In information theory, entropy is a measure of the unpredictability of the information contained in a message. Information entropy, as proposed by Claude Shannon, makes an excellent method for objective analysis because it deals directly with probability and uncertainty, which are always present in a field such as music that depends so heavily on human subjectivities. The genre of shape note music has been chosen for its relative simplicity compared to other genres such as the classical symphony, which renders it significantly easier to analyze objectively. Entropy is a measure of impurity, disorder or uncertainty in a set of examples. More specifically, this study has been conducted through the collection of data on information entropy in the genre of shape note music. Information gain (IG) measures how much information a feature gives us about the class. This paper will be centered on a case study designed to probe a possible objective method for analyzing music that has already proven itself in the analysis of computational systems: information theory. In bounded systems where thermodynamic variables are definable, D-information is proportional to negative entropy.
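
The statement that information gain measures how much a feature tells us about the class can be sketched as the drop in class entropy once the feature value is known. The following is a minimal sketch under that standard definition. The names `entropy_bits` and `information_gain` are hypothetical helpers introduced here, not something the text specifies.

```python
import math
from collections import Counter, defaultdict


def entropy_bits(labels):
    """Entropy (in bits) of a list of class labels, from observed frequencies."""
    total = len(labels)
    if total == 0:
        return 0.0
    counts = Counter(labels)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


def information_gain(feature_values, labels):
    """Class entropy minus the weighted class entropy within each feature value."""
    total = len(labels)
    if total == 0:
        return 0.0
    # Group the class labels by the feature value they co-occur with.
    groups = defaultdict(list)
    for value, label in zip(feature_values, labels):
        groups[value].append(label)
    conditional = sum(
        (len(group) / total) * entropy_bits(group) for group in groups.values()
    )
    return entropy_bits(labels) - conditional
```

A feature that separates the classes perfectly recovers the full class entropy as gain, while a feature that is independent of the class yields a gain of zero.
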
